Blog-driven development - Auto-mocking for Bluetooth / BLE

Published: 2021-04-29 by Lars. Tags: work process, test, tools

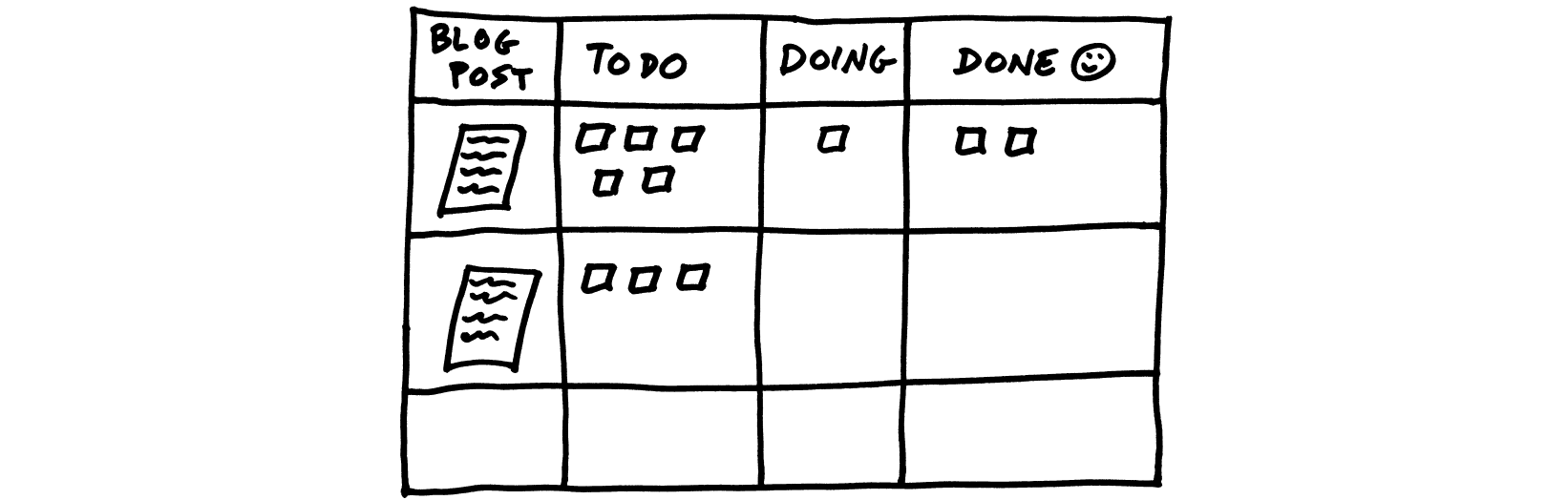

This blog post demonstrates an example of blog-driven development. Before writing code for a new feature, I want to clarify in my mind how the feature should behave for users, and a good way to focus my thought process is to write the blog post announcing the feature.

Below you will find the first draft version of a blog post describing a new testing tool that I am about to start building.

NOTE: The tool has now been released; you may want to read the announcement.

Auto-mocking for Bluetooth / BLE

In this blog post I announce a new tool for auto-mocking Bluetooth Low Energy (BLE) traffic in unit tests. This will allow us to do integration testing of mobile apps for Bluetooth devices with the speed and robustness of ordinary unit tests, potentially allowing for sub-second test durations.

This post is aimed at readers who already have some experience with automated testing of mobile apps. You are probably already frustrated by the slowness and fragility of ordinary end-to-end testing, and equally frustrated by manual mocks in unit tests frequently becoming outdated.

Background

I help teams build software products using continuous delivery. Having adequate automated test coverage is essential to making continuous delivery work in practice, and making testing fast is essential for developer productivity. You can find my earlier blog posts and talks about auto-mocking here:

- Lynhurtige end-to-end tests - foredrag ("Lightning-fast end-to-end tests", a talk in Danish)

- Don't let your mocks lie to you!

- Super fast end-to-end tests

- Unit test your service integration layer

What I call auto-mocking is when developers run their UI tests with pre-recorded mocks from actual interactions between the UI and external services or devices. This contrasts with manual mocking, where developers write and maintain the mocking code by hand.

After working solely on web-based products, lately I have been working on a mobile app with a company producing a Bluetooth-enabled device. While good tools exist for auto-mocking HTTP traffic (such as PollyJS and Hoverfly), we were not able to find an adequate solution for BLE traffic. Based on my experience with the HTTP-based auto-mocking tools, I have built a tool for auto-mocking BLE traffic in React Native applications.

This tool enables full integration testing of the React Native app and the Bluetooth device, while the tests run several orders of magnitude faster than ordinary end-to-end tests.

Goals

The tool is meant for mobile app developers. I want the developer experience to be great: tests should be easy to write, fast to run, and the tool itself should integrate well into both normal development workflows and continuous integration pipelines.

Specifically, I want the tool to do auto-mocking: it should allow developers to capture and save BLE traffic from the app running on a real phone interacting with a real device. These capture files can then be used during normal development to mock BLE traffic when running app tests.

Some potential capabilities are currently out of scope for this tool:

- Replacing end-to-end tests entirely: you can speed up testing by replacing almost all of your current end-to-end tests with unit tests and auto-mocking, but you probably still want a few end-to-end tests for smoke testing purposes.

- Sharing one test between capture and app testing: you will write separate tests for capture and for app testing. I have not attempted to design the tool in a way where the same test can be used for both purposes.

Demo

Take a look at this demo project, which shows the tool in action (TBD). The project is a standard React Native project, using the react-native-ble-plx library for BLE communication and Jest for testing.

Here is a sample test case for the main screen of the app, where we expect a number of nearby Bluetooth devices to show up with their battery and volume levels.

    describe("App", () => {
      it("should display list of BLE devices", async () => {
        // when: render the app
        const { getByA11yLabel } = render(<App />);

        // then: initially no devices are displayed
        expect(getByA11yLabel("BLE device list")).toHaveTextContent("");

        // when: simulating some BLE traffic
        bleMock.playUntil("scanned");

        // then: eventually the scanned devices are displayed
        await waitFor(() =>
          expect(getByA11yLabel("BLE device list")).toHaveTextContent(
            "SomeDeviceName, SomeOtherName"
          )
        );
      });
    });

This test runs in less than a second, as you can see from this output.

    $ npm test

    > jest

    PASS __tests__/App-test.js
      App
        √ display list of BLE devices (670 ms)

    Test Suites: 1 passed, 1 total
    Tests:       1 passed, 1 total
    Snapshots:   0 total
    Time:        3.315 s, estimated 4 s
    Ran all test suites.

This test uses a capture file that looks like this. Note how the test above calls bleMock.playUntil("scanned") to replay traffic up to the "scanned" label in the capture file.

    onDeviceScan(null, { name: "SomeDeviceName" });
    onDeviceScan(null, { name: "SomeOtherName" });
    label("scanned");

The capture file is generated when running the capture test, which looks like this:

    // TBD

Implementation details

Architecture

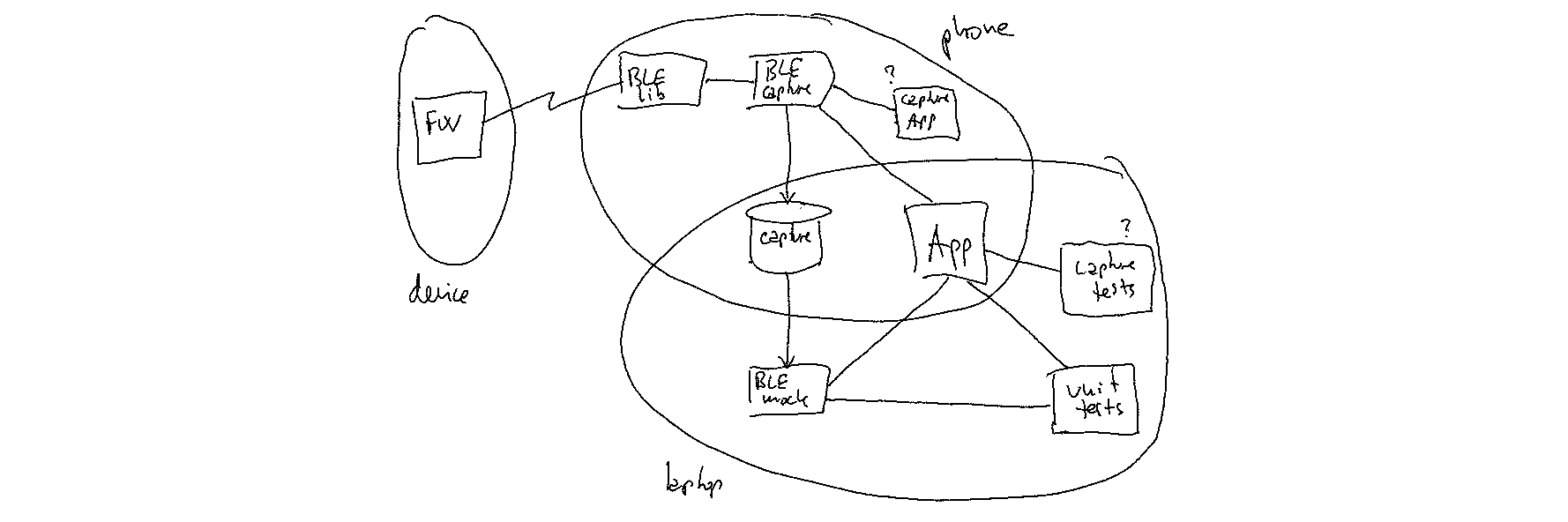

Auto-mocking is used in two distinct modes: 1) The developer captures real BLE traffic on real devices, by running capture tests against the app on a real phone and store the capture files as build artifacts. This can be done quite infrequently. And then 2) the developer use these capture files to auto-mock BLE traffic during unit testing locally on the developer machine. As this is very fast, it can be done as frequently as needed. Here is an illustration of these two modes:

Capture format

I had to decide on a file format for the capture file. Since these files are normally used by the same auto-mocking tool in its two modes, I was free to choose a file format that works well for this purpose. Some level of interoperability with other tools would be an added benefit.

Auto-mocking tools for HTTP traffic can use the HAR (HTTP Archive) file format, which browser debugging tools also use to save traffic. For instance, PollyJS uses the HAR file format, while Hoverfly uses its own JSON-based format.

For BLE traffic I could choose the Bluetooth HCI log format as produced by Android and consumable by tools such as Wireshark. However, this format is quite low-level and expensive to implement correctly. Instead I have chosen a file format that closely mimics the API of the react-native-ble-plx module, which made the tool easier to implement.
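To make the idea of an API-shaped capture format a bit more concrete, here is a minimal sketch of how a recorder could produce lines like the ones in the demo capture file. It assumes a scan listener with the (error, device) signature that react-native-ble-plx passes to its device scan callback. The helper name withScanRecording, the choice to record only the device name, and the captureLines array are my own illustrative assumptions, not the tool's actual API.

```javascript
// Hypothetical helper (not part of any library): wraps a scan listener of the
// shape (error, device) => void and appends one capture line per invocation.
function withScanRecording(listener, captureLines) {
  return (error, device) => {
    // Assumption: only the fields the tests care about are recorded.
    const recordedDevice = device ? { name: device.name } : null;
    const recordedError = error ? { message: error.message } : null;
    captureLines.push(
      `onDeviceScan(${JSON.stringify(recordedError)}, ${JSON.stringify(recordedDevice)});`
    );
    // Forward the event unchanged so the app behaves as usual during capture.
    listener(error, device);
  };
}

const captureLines = [];
const names = [];
const recordingListener = withScanRecording((error, device) => {
  if (device) names.push(device.name);
}, captureLines);

// Simulate two scan results, then mark the moment with a label line.
recordingListener(null, { id: "AA:BB", name: "SomeDeviceName", rssi: -60 });
recordingListener(null, { id: "CC:DD", name: "SomeOtherName", rssi: -72 });
captureLines.push('label("scanned");');

console.log(captureLines.join("\n"));
```

Because each line mirrors a callback the app already handles, replaying a capture mostly amounts to calling the same listeners again with the recorded arguments.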

Capture mechanism

While capture tests run on a real phone, the tool needs to store the capture file as an artifact on the developer machine. So after completing a capture, the tool has to send the capture file from the phone to the developer machine. It can do that in various ways, for example using the phone file system, or sending it to a server running on the developer machine. However, since the phone will already be attached to the developer machine running the capture test, the easiest approach is to utilize the system logging capabilities of phones (console.log and similar in React Native) which can be collected via tools such as adb logcat on Android and idevicesyslog on iOS.
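The log-based approach could be sketched roughly as follows: the app writes each captured event as one log line with a recognizable marker, and a script on the developer machine filters the collected log output for that marker. The marker BLE_CAPTURE:, the function names, and the event shapes are assumptions for illustration, and the sample log lines are made up rather than real adb logcat or idevicesyslog output.

```javascript
// Assumption: a made-up marker used to find capture lines among other logs.
const CAPTURE_PREFIX = "BLE_CAPTURE:";

// On the phone: format one captured event as a single log line,
// which the app would then pass to console.log.
function formatCaptureLogLine(event) {
  return CAPTURE_PREFIX + JSON.stringify(event);
}

// On the developer machine: extract the captured events from raw log output.
function extractCaptureEvents(logOutput) {
  const events = [];
  for (const line of logOutput.split(/\r?\n/)) {
    const start = line.indexOf(CAPTURE_PREFIX);
    if (start === -1) continue; // unrelated log line
    events.push(JSON.parse(line.slice(start + CAPTURE_PREFIX.length)));
  }
  return events;
}

// Made-up log output: capture lines mixed with unrelated lines,
// each with some prefix added by the logging system.
const sampleLog = [
  "[log] app started",
  "[log] " + formatCaptureLogLine({ type: "onDeviceScan", args: [null, { name: "SomeDeviceName" }] }),
  "[log] some unrelated message",
  "[log] " + formatCaptureLogLine({ type: "label", name: "scanned" }),
].join("\n");

const capturedEvents = extractCaptureEvents(sampleLog);
console.log(capturedEvents.length); // 2
```

Writing one event per line keeps each log entry short and lets the extraction step ignore whatever prefix the platform's logging system adds in front of the message.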

Make the test control the mocking

Auto-mocking tools for HTTP traffic can rely on the inherent request-response nature of HTTP traffic: When the app sends a request, the auto-mocking tool will look in the capture file for a matching request and will mock the corresponding response.
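As a rough illustration of that lookup, here is a minimal sketch that matches a request against recorded entries by method and URL. The entry shape is a heavily simplified assumption (real recordings carry many more fields, and real tools match on more than these two properties), and findRecordedResponse is a name I made up for this example.

```javascript
// Simplified recorded entries; the URLs and bodies are made-up examples.
const recordedEntries = [
  {
    request: { method: "GET", url: "https://example.test/devices" },
    response: { status: 200, body: '[{"name":"SomeDeviceName"}]' },
  },
  {
    request: { method: "POST", url: "https://example.test/devices" },
    response: { status: 201, body: "" },
  },
];

// Return the recorded response for the first entry whose request matches,
// or undefined when nothing in the capture matches.
function findRecordedResponse(entries, request) {
  const match = entries.find(
    (entry) =>
      entry.request.method === request.method &&
      entry.request.url === request.url
  );
  return match ? match.response : undefined;
}

const mocked = findRecordedResponse(recordedEntries, {
  method: "GET",
  url: "https://example.test/devices",
});
console.log(mocked.status); // 200
```

The important property is that the app drives the exchange: nothing is replayed until the app asks for it.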

Since BLE traffic is inherently bi-directional, the approach needs to be a bit different. Just as the test controls the user actions during the scenario being tested, the test must also control the device actions. The tool uses two mechanisms to accomplish this. First, the capture file is sequential: traffic is simulated strictly in the order it was captured. Second, interesting moments can be labelled during the capture, allowing the test to simulate all traffic up to a specified label. You can see how the label "scanned" is referenced in the "Demo" section above.
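The two mechanisms can be sketched in a few lines. This is only a minimal illustration under my own assumptions: createBleMock, the event shapes, and the handlers object are hypothetical, and the real tool may well be structured differently. Only the playUntil name and the "scanned" label come from the demo above.

```javascript
// Hypothetical sketch of sequential, label-driven playback.
function createBleMock(captureEvents, handlers) {
  let position = 0; // index of the next captured event to replay

  return {
    // Replay captured events strictly in order until the label is reached.
    playUntil(label) {
      while (position < captureEvents.length) {
        const event = captureEvents[position++];
        if (event.type === "label") {
          if (event.name === label) return; // stop just after the label
          continue; // labels other than the requested one are passed over
        }
        const handler = handlers[event.type];
        if (handler) handler(...event.args);
      }
      throw new Error(`Label "${label}" not found in remaining capture`);
    },
  };
}

// In-memory equivalent of the demo capture file.
const capture = [
  { type: "onDeviceScan", args: [null, { name: "SomeDeviceName" }] },
  { type: "onDeviceScan", args: [null, { name: "SomeOtherName" }] },
  { type: "label", name: "scanned" },
];

const seen = [];
const bleMock = createBleMock(capture, {
  onDeviceScan: (error, device) => seen.push(device.name),
});

bleMock.playUntil("scanned");
console.log(seen.join(", ")); // SomeDeviceName, SomeOtherName
```

Keeping a single position that only moves forward is what makes the playback sequential: a later call to playUntil continues from where the previous one stopped, and asking for a label that never appears fails loudly instead of silently replaying nothing.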